How to Secure the beast (GenAI): Use cases, Security Challenges, and applicable solutions

How to Secure the beast (GenAI): Use cases, Security Challenges, and applicable solutions.

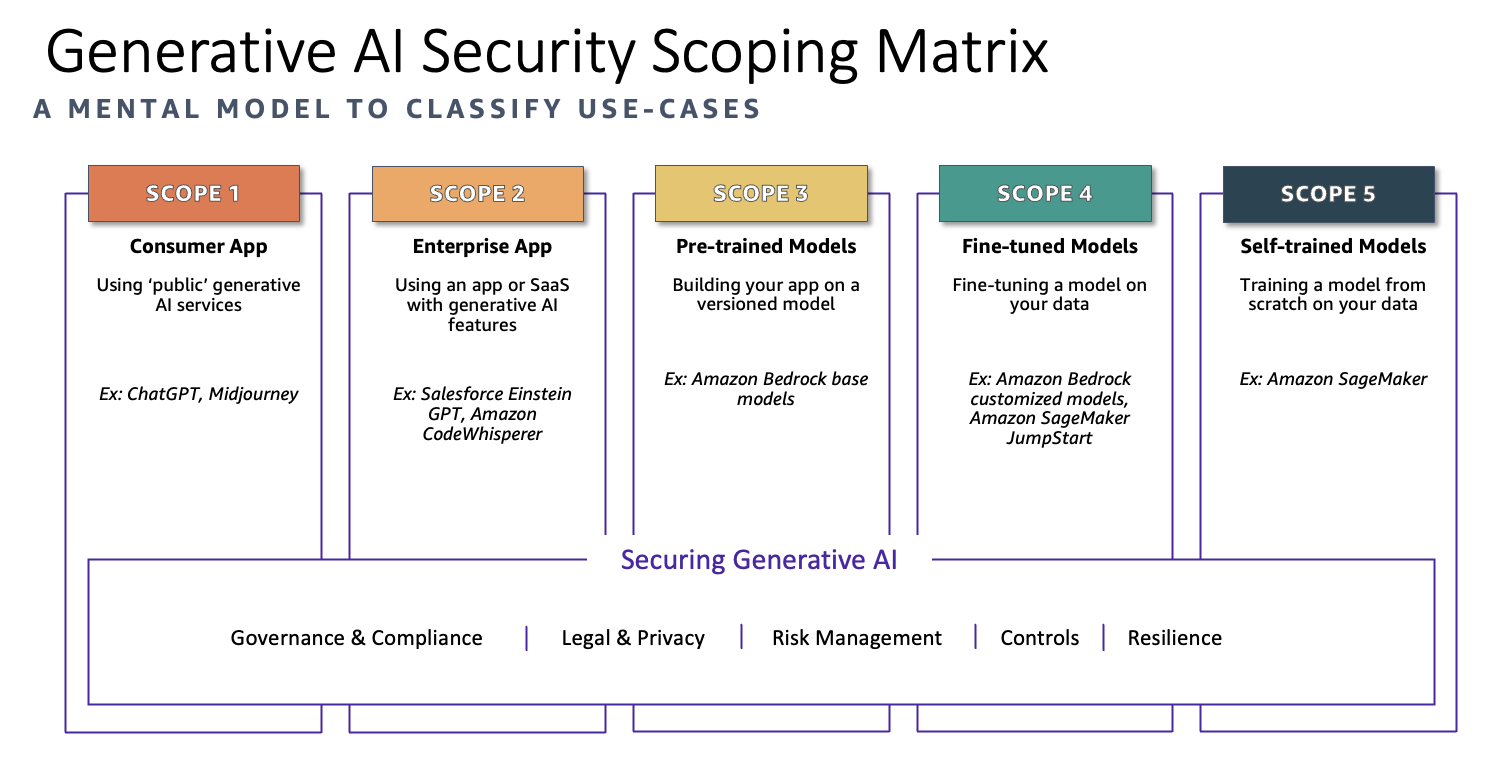

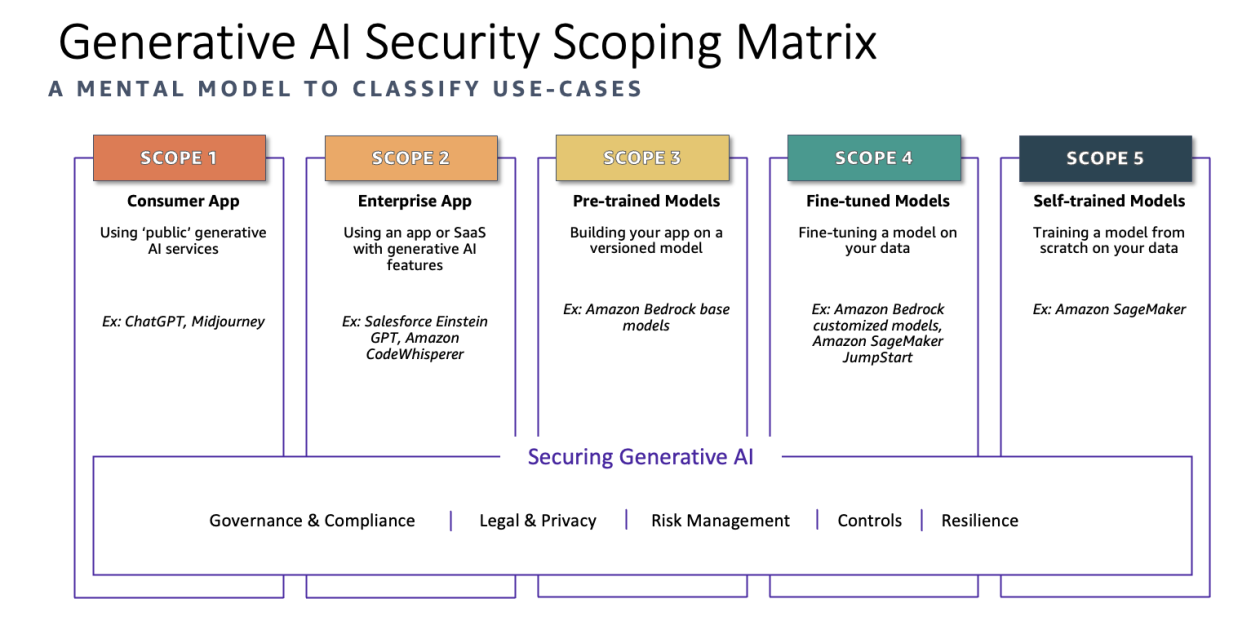

Generative AI (GenAI) is revolutionizing industries by providing smarter, more personalized, and highly efficient solutions across various sectors. From consumer chatbots to enterprise productivity tools, GenAI applications are reshaping the digital landscape. However, with this power comes an urgent need for security to protect sensitive data, prevent unauthorized access, and safeguard against emerging AI-specific threats.

In this article, we explore five core use cases for GenAI, their unique security challenges, and how to apply the OWASP Top 10 for Large Language Models (LLMs) to secure them. We’ll also highlight real-world examples to illustrate how these technologies are being implemented today.

1. GenAI powered Consumer Apps:

Use Case: Consumer apps are the front line of GenAI’s reach, bringing personalized assistance directly to users through websites, mobile apps, and digital assistants.

Popular Examples:

- ChatGPT by OpenAI: A conversational AI that helps users with tasks like creative writing, Q&A, and problem-solving.

- Snapchat My AI: A chatbot within Snapchat that provides personalized recommendations and messaging ideas.

- GrammarlyGO: AI-powered writing assistance that improves clarity, style, and even generates ideas.

- Replika: An AI companion for emotional support and friendly conversation.

Security Challenges: Consumer apps face a high volume of interactions, often involving sensitive data. Securing them requires monitoring for malicious activity, preventing unauthorized access, and avoiding content manipulation.

Applicable OWASP Top 10:

- A1. Insecure Model Access: Secure model access with API keys, OAuth tokens, and multi-factor authentication (MFA).

- A2. Training Data Poisoning: Ensure only sanitized data is fed into the model, preventing manipulated inputs that could lead to biased or harmful outputs.

- A3. Prompt Injection: Implement input validation to prevent malicious prompt manipulation.

- A4. Model Inference Attacks: Throttle and monitor API requests to detect abnormal usage that may signal inference attacks.

- A8. Unauthorized Access Control: Use role-based access control (RBAC) to restrict access to data and ensure users only see authorized content.

Think of consumer AI apps like a concierge that knows your preferences—just be sure it’s not telling them to anyone else!

2. GenAI Powered Enterprise Apps:

Use Case: GenAI applications in the enterprise sector help automate workflows, generate insights from data, and support employee productivity. These apps are like having a specialized analyst that understands your business needs.

Popular Examples:

- Salesforce Einstein GPT: Integrates AI into CRM, helping businesses automate sales tasks, generate insights, and create customer communications.

- Microsoft Copilot in Office 365: Enhances productivity in Word, Excel, and Teams by assisting with data analysis, document drafting, and meeting summaries.

- ServiceNow Generative AI: Automates workflows and customer service with conversational AI capabilities.

- Oracle Digital Assistant: Streamlines enterprise tasks like HR requests, financial queries, and supply chain management.

Security Challenges: Enterprise apps often process highly sensitive, proprietary data. Security issues such as excessive data exposure, inadequate access controls, and weak monitoring can lead to breaches with significant financial and reputational costs.

Applicable OWASP Top 10:

- A5. Excessive Data Exposure: Configure data filtering to ensure sensitive information isn’t exposed in AI-generated responses.

- A6. Insecure Model Updates: Require digital signatures and version tracking to monitor model updates and prevent tampering.

- A7. Insufficient Monitoring: Enable real-time monitoring to detect suspicious activity and ensure data security.

- A9. Inadequate Security Configurations: Use secure configurations, like cloud containerization, to isolate LLM processes and limit data exposure.

- A10. Supply Chain Vulnerabilities: Regularly vet third-party tools and libraries to minimize potential vulnerabilities.

Integrate auditing tools to monitor data access and tampering, and enforce strict role-based access controls to protect sensitive areas.

Enterprise GenAI is like a trusted employee that’s “in the know”—but keep it from wandering around unsupervised!

3. GenAI - Pre-Trained Models: Ready-Made Intelligence, with Caveats

Use Case: Pre-trained models offer a fast and flexible foundation for various applications, providing baseline intelligence without the need for specialized training. They are often used as the backbone of general-purpose AI tasks.

Popular Examples:

- BERT (Bidirectional Encoder Representations from Transformers) by Google: A widely used NLP model for tasks like text classification and sentiment analysis.

- GPT-3.5 by OpenAI: Known for its versatility, it’s used across applications for tasks like summarization and conversational AI.

- CLIP (Contrastive Language-Image Pretraining) by OpenAI: A multimodal model used in image recognition and text-to-image applications.

- Whisper by OpenAI: Used for speech-to-text tasks, suitable for transcription and multilingual audio applications.

Security Challenges: Pre-trained models carry the risk of embedded biases and vulnerabilities, given that they’re trained on large, public datasets. They require strict access control and regular monitoring to prevent excessive data exposure and prompt injections.

Applicable OWASP Top 10:

- A4. Model Inference Attacks: Implement access controls, like throttling and blocking repeated requests, to reduce inference attacks.

- A3. Prompt Injection: Apply strict input validation to prevent potential manipulation.

- A6. Insecure Model Updates: Keep pre-trained models updated from trusted sources, ensuring vulnerabilities are patched.

- A7. Insufficient Monitoring: Log and track access to monitor for abuse or abnormal patterns.

- A5. Excessive Data Exposure: Limit response generation to prevent inadvertent exposure of sensitive data.

Applying rate limiting and CAPTCHA can help reduce brute-force attacks and restrict prompt injection.

Pre-trained models are like a prepackaged dinner—they’re convenient but double-check the “ingredients” before serving!

4. Fine-Tuned Models: Tailoring for Specific Needs

Use Case: Fine-tuning involves customizing a pre-trained model with specific data, creating highly specialized solutions for particular industries or use cases, like healthcare or finance.

Popular Examples:

- MedPaLM 2 by Google DeepMind: Fine-tuned for medical question answering, tailored for healthcare applications.

- BloombergGPT: Fine-tuned to interpret financial data and generate reports for the finance industry.

- CodeT5 by Salesforce: Tailored for coding tasks like code generation, bug detection, and documentation.

- LegalBERT: An NLP model for legal language, assisting with case law analysis and contract reviews.

Security Challenges: Fine-tuned models often require sensitive industry data for training. Protecting this data from unauthorized access and exposure is critical.

Applicable OWASP Top 10:

- A2. Training Data Poisoning: Only use trusted, sanitized data to prevent corruption of the model.

- A1. Insecure Model Access: Enforce strict access controls to prevent unauthorized interaction with the model.

- A5. Excessive Data Exposure: Apply techniques like differential privacy to minimize information leakage.

- A9. Inadequate Security Configurations: Use isolated environments during fine-tuning to prevent exposure.

- A8. Unauthorized Access Control: Regularly review access controls to ensure only authorized personnel can interact with the model.

Implementing secure environments for fine-tuning and applying differential privacy techniques can help protect sensitive data.

Fine-tuned models are like a bespoke suit—perfectly tailored but handle with care!

5. Self-Trained Models: Custom AI for Unique Requirements

Use Case: Self-trained models are built from scratch, typically using proprietary data to meet an organization’s unique needs, such as autonomous driving or voice recognition tailored to specific dialects.

Popular Examples:

- Tesla’s Self-Driving Model: Tesla has developed its own self-driving AI, trained using data from millions of miles driven by Tesla vehicles. This data enables continuous improvements in the model’s understanding of real-world driving conditions.

- Facebook’s Speech Recognition Models: Meta (formerly Facebook) custom-trains speech recognition models to accommodate various languages and dialects, optimizing for diverse speech inputs across its platforms.

- Stability AI’s Stable Diffusion: Stability AI developed the Stable Diffusion model for high-quality image generation, training it on a vast dataset to support open-source applications for art and creativity.

- John Snow Labs’ Spark NLP for Healthcare: This model is trained on healthcare-specific language, making it highly accurate for extracting and analyzing clinical data in medical applications.

Security Challenges: Self-trained models require extensive data security and robust control measures throughout their lifecycle, making them highly attractive targets for attackers. Protecting proprietary data and ensuring model integrity is crucial.

Applicable OWASP Top 10:

- A10. Supply Chain Vulnerabilities: Vet all dependencies thoroughly, as self-training typically relies on a wide range of external libraries and tools.

- A2. Training Data Poisoning: Use only validated, sanitized data to prevent malicious inputs from corrupting the model.

- A4. Model Inference Attacks: Implement access control mechanisms, such as rate limiting and anomaly detection, to prevent data extraction through inference attacks.

- A9. Inadequate Security Configurations: Apply hardened configurations to safeguard the model, isolating training environments to reduce exposure.

- A8. Unauthorized Access Control: Encrypt sensitive data at rest and enforce strict access control policies for both training and production environments.

Building and deploying self-trained models in isolated, secure environments with regular audits and encryption helps mitigate risks associated with unauthorized access and data tampering.

Self-trained models are like handcrafted furniture—one-of-a-kind creations that deserve robust protection!

References:

-thesecguy